1. Introduction

As a crucial component, the engine has a great influence on vehicle performance. The engine fault rate always ranks first among vehicle components because of the engine's complex structure and running conditions. Accordingly, detecting engine problems is important for vehicle inspection and maintenance in automotive workshops, and the development of an expert system for engine diagnosis in the automotive workshop is currently an active research topic. Traditionally, an engine fault symptom is described in the workshop simply as present or absent. However, this description cannot lead to high diagnostic performance because a symptom always appears in different degrees rather than simply being present or absent. Moreover, an engine fault is sometimes a multiple-fault problem, so the occurrence of an engine fault should also be represented as a probability instead of a binary or fuzzy value. In addition, the relationship between faults and symptoms is complex and nonlinear. In view of the nature of these problems, an advanced expert system for engine diagnosis in automotive workshops should use fuzzy logic to quantify the degrees of symptoms and a probabilistic fault classifier to determine the possibilities of multiple faults. With the fuzzy logic technique, the symptoms are fuzzified into fuzzy values, and the diagnosis is carried out based on these values. After multi-fault classification, the output of the diagnostic system is defuzzified into fault labels.

Recently, many modeling and classification methods combined with fuzzy logic have been developed to model the nonlinear relationship between symptoms and engine faults. In 2003, a fuzzy neural network (FNN) was proposed to detect diesel engine faults [1]. Vong et al. [2,3] applied multi-class support vector machines (SVM) and probabilistic SVM to engine ignition system diagnosis based on signal patterns; however, the signal-based method is not considered in this study because it is difficult to apply in automotive workshops. In reference [4], fuzzy support vector machines (FSVM) were proposed to classify complex patterns; it is believed that the FSVM technique can also be applied to fault diagnosis problems.

Both FNN and FSVM have their own limitations. For FNN, firstly, constructing the network is so complex (involving the number of hidden neurons and layers, the trigger functions, etc.) that choosing them is difficult, and improper selection results in poor performance. Secondly, the network model depends on the training data: if the dataset is not large enough, the model will be inaccurate, but if it is excessive, overfitting occurs and the FNN model will also be inaccurate. As for FSVM, it suffers from the difficulty of determining its hyperparameters. There are two hyperparameters (σ, c) for user adjustment. These parameters admit a very large number of value combinations, and the user has to spend a lot of effort to determine them.

Recently, an improved statistical method based on the extreme learning machine, namely the sparse Bayesian extreme learning machine (SBELM), was developed to deal with the above problems in classification [5]. SBELM is a probabilistic classifier. It inherits the fast training time of the extreme learning machine and, from the sparse Bayesian learning approach, the sparsity of weights, which prunes the number of corresponding hidden neurons to a minimum. It is believed that the fast training time and the sparsity property enable SBELM to deal effectively with problems involving large numbers of data points. Besides, SBELM lets the user define its architecture easily because its classification accuracy is insensitive to its hyperparameter, the number of hidden nodes (L), as long as L is over 49 [5], whereas the fuzzy probabilistic neural network (FPNN) and the fuzzy probabilistic support vector machine (FPSVM) do not have this attractive feature. As a result, SBELM is selected as the training algorithm for building the probabilistic classifier in this study. Moreover, no research has yet applied fuzzy logic to SBELM for any diagnosis problem. So a promising avenue of research is to apply fuzzy logic to SBELM for car engine multiple-fault diagnosis.

In this paper, a new framework, the fuzzy sparse Bayesian extreme learning machine (FSBELM), is proposed for fault diagnosis of car engines. Firstly, fuzzy logic assigns memberships to the symptoms according to their degrees. Then, SBELM is employed to construct a set of probabilistic diagnostic models, or classifiers, based on the memberships. Finally, a decision threshold is employed to defuzzify the output probabilities of the diagnostic models into decision values.

Because of the multiple-fault problem, the standard evaluation criterion, exact match error, is not the most suitable performance measure as it does not count partial matches. Hence the F-measure is considered in this paper to evaluate the diagnostic performance because it is a partial matching scheme.

2. System Design

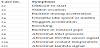

Based on domain analysis, the typical symptoms and car engine faults are listed in Tables 1 and 2, respectively. Table 3 shows the relationship between the symptoms and the engine faults. If an engine exhibits the ith symptom, then xi is set to 1; otherwise it is set to 0. In a similar manner, if an engine is diagnosed with the jth fault, then yj is set to 1; otherwise it is set to 0. Hence, the symptoms of an engine can be expressed as a vector x = [x1, x2, …, x11]. Similarly, the faults of an engine are expressed as a binary vector y = [y1, y2, …, y11].

2.1 Fuzzification of input symptoms

In practice, car engine symptoms have some degree of uncertainty. Hence fuzzy logic is applied to represent this uncertainty. A fuzzy set in fuzzy logic can be expressed as follows:

Assuming universe A={x1, x2,…, xn},

In Eq. (1), μA(xi)/xi represents the correspondence between the membership μA(xi) and the element xi rather than a mathematical operation. μA(xi) ∈ [0,1] reflects the degree to which xi belongs to A.
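For reference, a discrete fuzzy set over a finite universe is conventionally written in Zadeh's notation as shown below. This is the textbook form and is only assumed here to correspond to Eq. (1); the plus signs denote the collection of the membership/element pairs, not arithmetic addition.

```latex
A = \frac{\mu_A(x_1)}{x_1} + \frac{\mu_A(x_2)}{x_2} + \cdots + \frac{\mu_A(x_n)}{x_n}
  = \sum_{i=1}^{n} \frac{\mu_A(x_i)}{x_i},
\qquad \mu_A(x_i) \in [0, 1]
```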

Based on domain knowledge, the membership functions of the symptoms are defined as follows:

For example, if the symptoms of one engine are given below:

- Able to crank but cannot start;

- Stall;

- Sometimes backfire during acceleration;

- Normal acceleration;

- Slight knock;

- Always backfire;

- Inlet pressure below 0.01MPa;

- 0%~1% above the normal throttle sensor signal;

- Coolant temperature is above 100°C or below 70°C;

- Lambda signal is between 0.3V and 0.7V

The membership vector of this car engine can then be written as s = [0.7, 1, 0.5, 0.3, 0, 0.5, 1, 1, 0.5, 1, 0]. This is how the fuzzification is carried out.
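The fuzzification step can be illustrated with a minimal sketch. The membership tables below are hypothetical placeholders chosen only for illustration (the symptom names, linguistic levels, and values are assumptions, not the membership functions of Eqs. (2)~(12)); the point is only to show how linguistic symptom descriptions might be mapped to a membership vector.

```python
# Hypothetical membership tables: symptom names, levels, and values are
# placeholders, NOT the paper's membership functions (Eqs. (2)-(12)).
MEMBERSHIP = {
    "backfire": {"never": 0.0, "sometimes": 0.5, "always": 1.0},
    "knock": {"none": 0.0, "slight": 0.3, "serious": 1.0},
}


def fuzzify(observations, symptom_order):
    """Map linguistic symptom levels to a membership vector with entries in [0, 1]."""
    vector = []
    for symptom in symptom_order:
        level = observations.get(symptom)
        # A symptom that was not reported is treated as absent (membership 0).
        vector.append(MEMBERSHIP[symptom][level] if level is not None else 0.0)
    return vector


s = fuzzify({"backfire": "sometimes", "knock": "slight"}, ["backfire", "knock"])
```

A lookup table suits symptoms described by discrete linguistic levels; symptoms measured on a continuous scale (for example a sensor voltage) would instead need a function of the measured value.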

3. Fuzzy Sparse Bayesian Extreme Learning Machine

Fuzzy sparse Bayesian extreme learning machine is defined as SBELM with fuzzified input. As the fuzzification of the input is presented in Section 2, this section introduces SBELM only.

Unlike the extreme learning machine, which calculates the inverse of the hidden layer output matrix H [6,7], SBELM employs a Bayesian mechanism to learn the output weights w. Consider a training dataset (si, ti), i = 1 to N, of N cases for a d-class problem, where si is the fuzzified input vector and ti is the corresponding label of si. The input to SBELM is the hidden layer output H, in which H = [h(s1), …, h(sN)]^T ∈ R^(N×(L+1)) and h(si) = [1, g1(θ1∙si+b1), …, gL(θL∙si+bL)], where g(.) is the activation function of the hidden layer, θ is the weight vector connecting the hidden and input nodes, and b is the threshold of the hidden node. For two-class classification, every training sample can be considered as an independent Bernoulli event P(t|s). The likelihood is expressed as:

where σ(.) is the sigmoid function
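The construction of the hidden layer output and the Bernoulli likelihood can be sketched as follows. This is a minimal illustration under stated assumptions: the activation g is taken to be the sigmoid, the hidden weights θ and thresholds b are drawn at random as in a standard extreme learning machine, and the likelihood is the usual Bernoulli form with y_i = σ(h(s_i)·w). All function and variable names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_layer_row(s, thetas, biases):
    """h(s) = [1, g(theta_1 . s + b_1), ..., g(theta_L . s + b_L)], with g assumed sigmoid."""
    row = [1.0]  # leading 1 acts as the bias term for the output weights
    for theta, b in zip(thetas, biases):
        row.append(sigmoid(sum(t * x for t, x in zip(theta, s)) + b))
    return row


def bernoulli_log_likelihood(H, t, w):
    """Sum over samples of t_i*log(y_i) + (1 - t_i)*log(1 - y_i), y_i = sigmoid(h_i . w)."""
    total = 0.0
    for h, ti in zip(H, t):
        y = sigmoid(sum(hj * wj for hj, wj in zip(h, w)))
        total += ti * math.log(y) + (1 - ti) * math.log(1.0 - y)
    return total


rng = random.Random(0)
d_in, L = 11, 5  # 11 fuzzified symptoms; L kept small only for the illustration
thetas = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(L)]
biases = [rng.uniform(-1, 1) for _ in range(L)]

samples = [[0.7, 1, 0.5, 0.3, 0, 0.5, 1, 1, 0.5, 1, 0], [0.0] * d_in]
H = [hidden_layer_row(s, thetas, biases) for s in samples]  # N x (L + 1)
log_lik = bernoulli_log_likelihood(H, [1, 0], [0.0] * (L + 1))
```

With all output weights set to zero, every y_i equals 0.5, so the log-likelihood of the two samples is 2·log(0.5); training then adjusts w to increase this quantity.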

There is always an independent hyperparameter ai associated with each weight wi; a weight wi is driven to zero when its ai tends to infinity. The values of the hyperparameters a are calculated by maximizing the marginal likelihood, which is obtained by integrating out the weight parameters w.

However, Eq. (16) cannot be integrated analytically. To solve this problem, automatic relevance determination (ARD) approximates it with a Gaussian using the Laplace approximation approach, such that

Where

where y = [y1, y2, …, yN]^T, A = diag(a), and B = diag(β1, β2, …, βN) is a diagonal matrix with βi = yi(1−yi). The center wMP and covariance matrix Σ of the Gaussian distribution over w obtained by the Laplace approximation are:

Where

After the maximum number of iterations through Eq. (22), most elements ai of a tend to infinity. According to the ARD mechanism, the ARD prior prunes the corresponding hidden neurons when the elements of w associated with these ai tend to zero. The final probability distribution P(tnew|snew, wMP) is predicted using the sparse weights based on

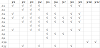

The above formulation is designed only for binary classification. To handle multi-class classification and produce probabilistic output, the one-versus-all strategy is usually employed. The one-versus-all strategy constructs a group of classifiers lclass = [C1, C2, …, Cd] in a d-label classification problem. It is simple and easy to implement. However, it generally gives poor results [10,11] because it does not consider pairwise correlation and hence induces a much larger indecisive region than the pairwise coupling strategy (using one-versus-one). The pairwise coupling strategy also constructs a group of classifiers lclass = [C1, C2, …, Cd] in a d-label classification problem, but each Ci = [Ci1, …, Cij, …, Cid] is composed of a set of d−1 different pairwise classifiers Cij, i ≠ j. Since Cij and Cji are complementary, there are d(d−1)/2 classifiers in total in lclass, as shown in Figure 1. To solve the multi-class problem as well as produce probabilistic output, the pairwise coupling strategy is adopted for SBELM. The strategy combines the outputs of every pair of classes to re-estimate the overall probability for a new instance. In this research, the following simple pairwise coupling strategy for multiple-fault diagnosis is proposed. Each probability ρi is calculated as

where nij is the number of training vectors with either the ith or the jth label, and s is an unseen case. Hence, the probability can be estimated more accurately from ρij = Cij(s) because the pairwise correlation between the labels is taken into account.
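A simple pairwise coupling rule of this kind can be sketched as below. The sketch assumes that ρi is the average of the pairwise outputs ρij weighted by the pair sizes nij, i.e. ρi = Σ_{j≠i} nij·ρij / Σ_{j≠i} nij; this form is an assumption consistent with the definitions above, and the function name and sample numbers are hypothetical.

```python
def couple_pairwise(pair_prob, pair_count):
    """Combine pairwise outputs rho_ij into one probability rho_i per label.

    pair_prob[i][j]  : rho_ij = C_ij(s), probability of label i from the (i, j) classifier
    pair_count[i][j] : n_ij, number of training vectors with label i or label j
    Assumed rule     : rho_i = sum_{j != i} n_ij * rho_ij / sum_{j != i} n_ij
    """
    d = len(pair_prob)
    rho = []
    for i in range(d):
        others = [j for j in range(d) if j != i]
        weighted = sum(pair_count[i][j] * pair_prob[i][j] for j in others)
        total = sum(pair_count[i][j] for j in others)
        rho.append(weighted / total)
    return rho


# Three labels; C_ij and C_ji are complementary, so pair_prob[j][i] = 1 - pair_prob[i][j].
pair_prob = [
    [None, 0.9, 0.8],
    [0.1, None, 0.4],
    [0.2, 0.6, None],
]
pair_count = [
    [0, 40, 60],
    [40, 0, 50],
    [60, 50, 0],
]
rho = couple_pairwise(pair_prob, pair_count)
```

The resulting ρi values are not forced to sum to one, which suits multiple-fault diagnosis, where several labels may be present in the same case.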

4. Experiments

4.1 Design of experiments

The FSBELM was implemented in MatLab. The output of each FSBELM classifier is a probability vector, so some well-known probabilistic diagnostic methods, such as the fuzzy probabilistic neural network (FPNN) [13] and the fuzzy probabilistic support vector machine (FPSVM), were also implemented in MatLab in order to compare their performance with FSBELM fairly. For the FPSVM, the kernel was the radial basis function, and the hyperparameters c and σ were both set to 1 according to usual practice. Regarding the network architecture of the FPNN, there are 11 input neurons, 15 neurons with Gaussian basis functions in the hidden layer, and 11 output neurons with sigmoid activation functions in the output layer.

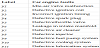

In total, 308 symptom vectors were prepared by collecting knowledge from ten experienced mechanics. The data were then divided into two groups: 77 vectors as the test dataset and 231 as the training dataset. All engine symptoms were fuzzified using the fuzzy memberships of Eqs. (2)~(12), producing the fuzzified training dataset TRAIN and the fuzzified test dataset TEST. For training FSBELM and FPSVM, each algorithm constructed 11 fuzzy classifiers fi, i ∈ {1, 2, …, d}, d = 11, based on TRAIN. The training procedures of FSBELM and FPSVM are shown in Figure 2, whereas the procedure for FPNN is not presented in Figure 2 because it is a single network structure instead of a set of individual classifiers.

4.2 Multiple fault identification

The outputs of FPNN, FPSVM and FSBELM are probabilities, so a simple threshold probability can be adopted to distinguish the existence of multiple faults. According to reference [13], the threshold probability was set to 0.8. The whole fault identification procedure is shown below.

- Input x = [x1, x2, …, x11] into every classifier fi and FPNN, where x is a test instance. The classifiers fi and the output neurons of FPNN return a probability vector ρ = [ρ1, ρ2, …, ρ11], where ρi is the probability of the ith fault label and ρ is the predicted vector of engine faults.

- The final classification vector y = [y1, y2, …, y11] is obtained based on Eq. (24).

The above steps are equivalent to a defuzzification procedure. The entire fault diagnostic procedures of FSBELM and FPSVM are depicted in Figure 3, whereas the procedure of FPNN is not shown in Figure 3 because it uses an entire network to predict the outputs; the fault identification procedure using the threshold is, however, the same.
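The thresholding step can be sketched in a few lines. The sketch assumes that a fault is flagged when its probability is greater than or equal to the 0.8 threshold; whether the comparison in Eq. (24) is strict or inclusive is an assumption, and the function name and sample probabilities are hypothetical.

```python
def defuzzify(rho, threshold=0.8):
    """Turn a probability vector into a binary fault vector (1 = fault flagged).

    Assumes an inclusive comparison (rho_i >= threshold).
    """
    return [1 if p >= threshold else 0 for p in rho]


# Hypothetical output probabilities for four fault labels.
y = defuzzify([0.93, 0.12, 0.80, 0.79])
```

Because each label is thresholded independently, any number of faults can be flagged for the same engine, which is what allows multiple faults to be reported simultaneously.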

4.3 Evaluation measure

The F-measure is mostly used for performance evaluation of information retrieval systems, where a document may belong to one or multiple labels simultaneously. This is very similar to the current application, in which the engine fault is a multiple-fault problem. The F-measure is defined in Eq. (25) by referring to [12]. The larger the F-measure value, the higher the diagnosis accuracy.
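A partial-matching F-measure for multi-label output can be sketched as follows. The sketch assumes the common micro-averaged form F = 2·Σ(y·t) / (Σy + Σt), summed over all test cases and labels, where y is the predicted binary vector and t the true one; this is an assumed form and is not guaranteed to be identical to Eq. (25). The function name and sample vectors are hypothetical.

```python
def f_measure(predicted, actual):
    """Micro-averaged F-measure over binary label vectors (assumed form).

    predicted, actual: lists of equal-length 0/1 vectors, one pair per test case.
    """
    overlap = sum(p * t for yp, yt in zip(predicted, actual) for p, t in zip(yp, yt))
    total = sum(sum(yp) for yp in predicted) + sum(sum(yt) for yt in actual)
    # If no label is predicted and none is true, treat the match as perfect.
    return 2.0 * overlap / total if total else 1.0


predicted = [[1, 0, 1], [0, 1, 0]]
actual = [[1, 0, 0], [0, 1, 0]]
score = f_measure(predicted, actual)
```

In this example the first case has one correct fault and one spurious fault: exact match would count the whole case as wrong, whereas the F-measure gives credit for the correctly identified fault and yields 0.8 overall.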

4.4 Experiment results and evaluation

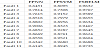

The overall F-measure of the predicted faults over TEST is shown in Table 4. All experiments were run on a PC with an Intel Core i5 @ 3.2 GHz and 4 GB of RAM. FSBELM has the best diagnostic performance, with an F-measure as high as 0.964, which indicates that FSBELM outperforms FPSVM and FPNN. The F-measure for each fault is shown in Table 5, where the F-measure of FSBELM for each fault is higher than that of FPNN and FPSVM. The reason why FPNN gives poor performance is that the training dataset in this research is not large enough (only 231 cases). The relatively low performance of FPSVM is due to the fact that its parameters (σ, c) may not be optimal. In fact, it is very difficult to determine the optimal parameters. On the other hand, FSBELM only needs the number of hidden nodes L to be set to 50. Table 4 also shows that FSBELM runs much faster than FPNN and FPSVM under the same TRAIN and TEST. So, FSBELM is a very promising approach for this application.

5. Conclusion

In this paper, FSBELM has been successfully applied to multiple-fault diagnosis of the car engine. Moreover, FPNN, FPSVM and FSBELM have been compared in detecting car engine faults based on various combinations and degrees of symptoms. This research is the first attempt at applying fuzzy logic to SBELM for engine multiple-fault diagnosis and at comparing the diagnostic performance of several fuzzy classifiers. Experimental results show that FSBELM outperforms FPSVM and FPNN in terms of accuracy, training time and diagnostic time. So, it can be concluded that FSBELM is a very promising approach for engine multiple-fault diagnosis.

Competing Interests

The author declares that there are no competing interests regarding the publication of this article.

Acknowledgments

The author would like to acknowledge the support of Prof. Chi-man Vong, Department of Computer Science, University of Macau, and Mr. Jiahua Lou, who developed the MatLab toolbox of SBELM.